Our full page speed tool has launched! Sitebeacon is available at https://sitebeacon.io.

Google's PageSpeed Insights is a great tool that enables you to get Lighthouse PageSpeed score that includes Overall Score, First Contentful Paint, Speed Index, Time to Interactive, First Meaningful Paint and other useful information concerning the core web vitals performance of a webpage.

But what if you want to bulk check a list of URLs? We've build an App Script and a Google Spreadsheet that you can use to check a list of URLs, whether these are a list of your sites pages or a set of competitive sites, it's there to use how you like.

We have also gone into details in this post about how it was built and the logic, so you can create your own version using Google App Script in Google Sheets.

Executing the automation

PageSpeed API key

To use the Script, you will need to get the Google API key to allow the code to fetch data from the PageSpeed API. In order to acquire the API key and API URL, you need to go to here .

There we need to click “Get A Key”. And you will need to create a project. Then you will get the API key and copy that key.

The google sheet

- Go the the Bulk PageSpeed Google Sheet and click 'Make a copy' from the 'File' menu in the navbar, this will open in a new tab on your browser.

- Replace the URLs in column A (pages) with the list of URLs you want to check.

- Go to the "Extensions" menu from the top navbar and click on the "App Script" option. This will open "App Script" editor on a new browser tab.

- You will need to approve access to the App Script (Go to Bulk_PageSpeed_Automation (unsafe)), we haven't had it approved by Google yet, and if you're worried about security, you can build your own script using the code and process below.

- You need to replace the 'Your API key' in the code with the API key we you got in the earlier step. Please make sure that the API key is inside of the quotes. You can get the API URL in the CURL section as well.

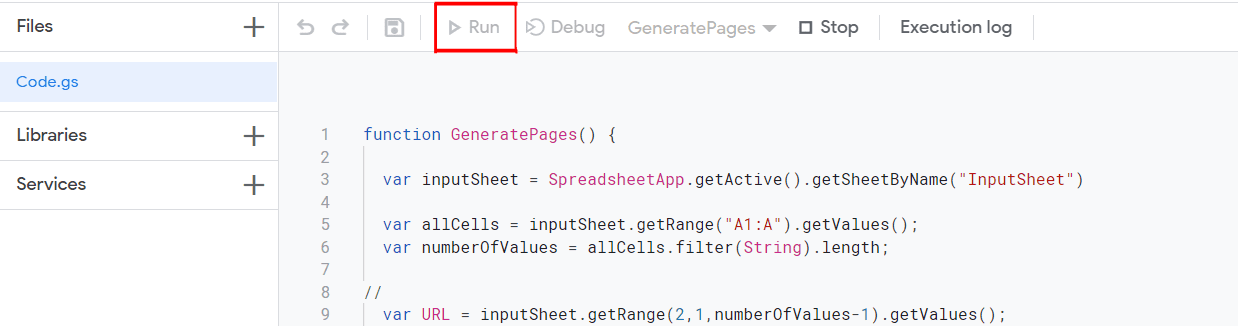

Run the script

Now click on 'Run' to execute the script. It may take a while to execute depending on how many URLs you have added. Once it is finished, two new sheets will be created named 'Mobile' and 'Desktop'. As the names suggest, the mobile sheet contains the mobile site score of each URL, and the Desktop has desktop site score. You also get HTTP response status code of each URLs on the inputSheet as well. The URLs which cannot be analysed (404/500) will appear in red text.

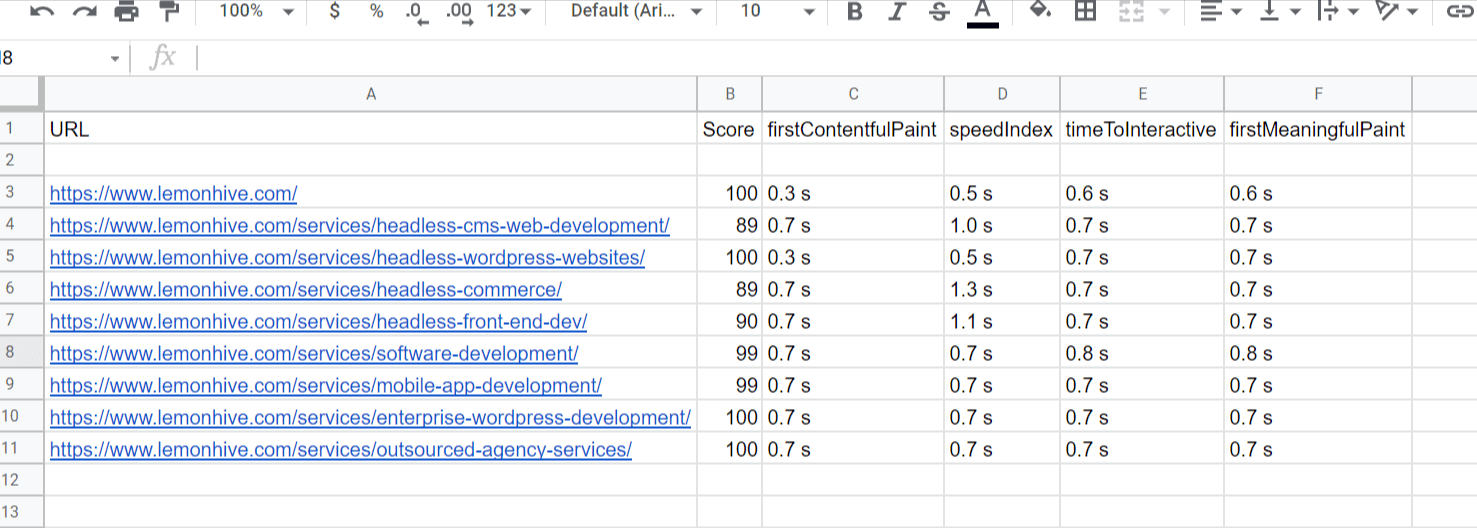

Spreadsheet results

After running the script, you should have two new tabs with the results from the URLs you inputted.

Working methodology

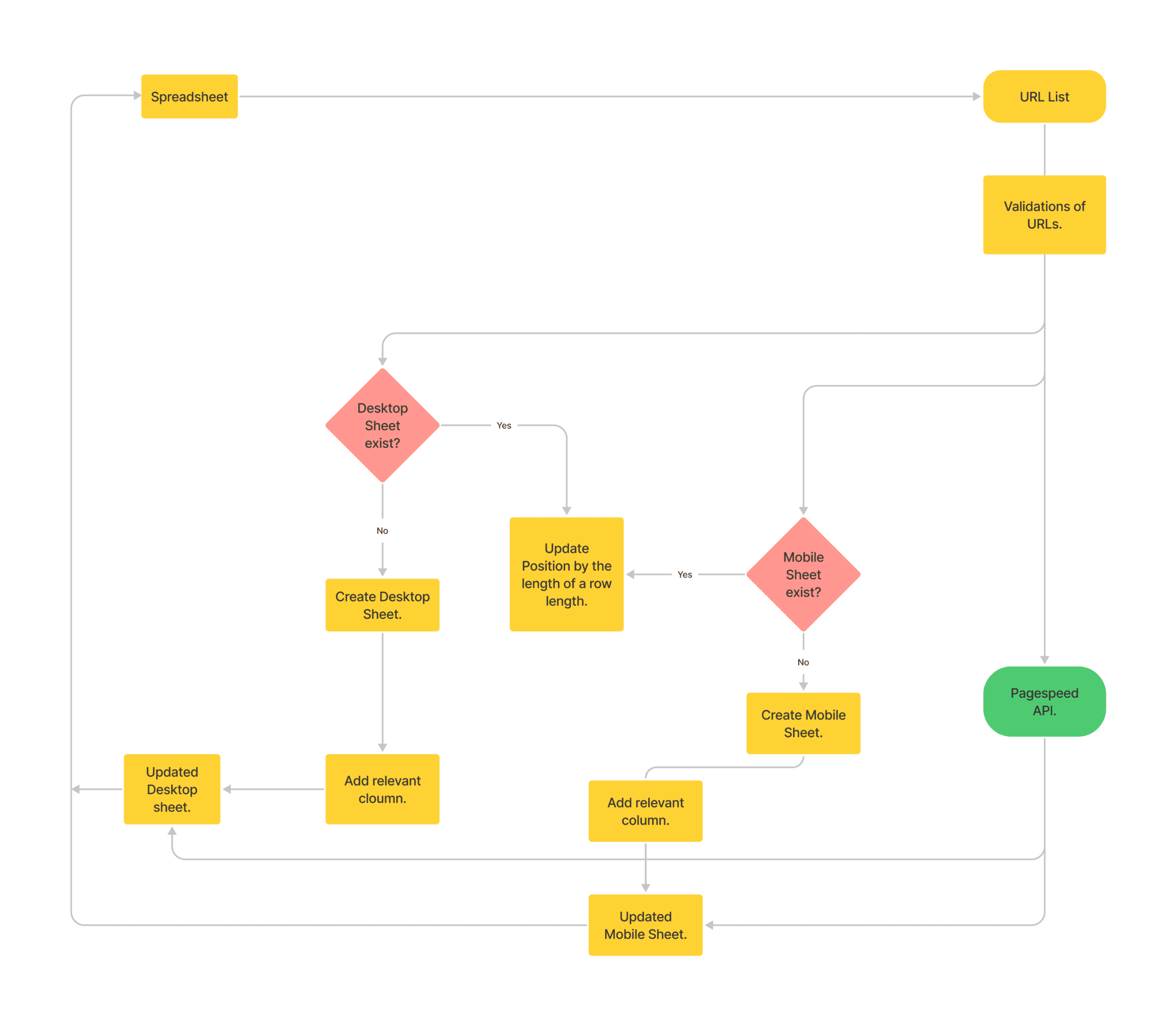

Just because we're a bit geeky, we want to show you how it works, so the diagram below satisfy our need to explain the logic, zoom in to look if you want, or skip to the next section to see how to build your own version.

If you want to build it yourself

1st step

So that two different sheets can be generated where (mobile & desktop), we used the App Script code function GeneratePages(){ }inputSheetStatus . From line 3 to line 6, we get the input from the “”. In line 8, we set the 2nd columns name “”.. From line 3 to line 6, we get the input from the “”. In line 8, we set the 2nd columns name “”.

function GeneratePages() {

var inputSheet = SpreadsheetApp.getActive().getSheetByName("InputSheet")

var allCells = inputSheet.getRange("A1:A").getValues()

var numberOfValues = allCells.filter(String).length

var URL_list = inputSheet.getRange(2,1,numberOfValues-1).getValues()

inputSheet.getRange('B1').setValue("Status")

}2nd step

In this step, we used a function called filter() to accumulate http status of 200 and 301 in the column named “Status” and other http status is set for red text. The value is stored in the variable named valid_URL_list. The entire process of this step is done from line 1 to 16.

var status = getStatusCode(url)

Logger.log(status)

if(status == '200' || status == '301' ){

inputSheet.getRange('B' + cellPos).setValue(status)

return true

}

else{

inputSheet.getRange('B' + cellPos).setFontColor('red')

inputSheet.getRange('B' + cellPos).setValue(status)

return false

}

})

Logger.log(valid_URL_list)The URLs the 1st column will be selected automatically & the range will be selected automatically as well.

We can see this output from the sheets by a simple code “Logger.log (valid_URL_list)”

3rd step

Next is selecting a file and we are checking whether the page “mobile” is present or not by the command " ss.getSheetByName". After that we applied the condition that if the sheet does not exist then create a sheet which is named “mobile” in the spreadsheet. From line 1 to 11 the code for the process was done.

In the lines, we are assigning the value URLs, Score, firstcontentfulPaint, Speed Index, TimetoInteractive, FirstmeaningfulPaint into different columns.

From line 13 to 22 the process was done for “desktop”. The entire ss.getSheetByName

() operates from line 1 to line 22.

var mobileSheet = ss.getSheetByName('Mobile')

if (!mobileSheet) {

mobileSheet = ss.insertSheet("Mobile")

mobileSheet.getRange('A1').setValue("URL")

mobileSheet.getRange('B1').setValue("Score")

mobileSheet.getRange('C1').setValue("firstContentfulPaint")

mobileSheet.getRange('D1').setValue("speedIndex")

mobileSheet.getRange('E1').setValue("timeToInteractive")

mobileSheet.getRange('F1').setValue("firstMeaningfulPaint")

}

var desktpSheet = ss.getSheetByName('Desktop')

if(!desktpSheet){

desktpSheet = ss.insertSheet("Desktop")

desktpSheet.getRange('A1').setValue("URL")

desktpSheet.getRange('B1').setValue("Score")

desktpSheet.getRange('C1').setValue("firstContentfulPaint")

desktpSheet.getRange('D1').setValue("speedIndex")

desktpSheet.getRange('E1').setValue("timeToInteractive")

desktpSheet.getRange('F1').setValue("firstMeaningfulPaint")

}4th step

Now from line 1 to 35, every URL in variable valid_URL_list was checked by the try and catch functions by applying forEach loop. Significance for this try function is to check for validation for each URL and if any invalid URL is found then it is logged by catch function and marked in red text.

valid_URL_list.forEach((url, index) => {

var pos = index+2

try{

var mobileData = fetchDataFromPSI('mobile', url)

Logger.log("Mobile Score: ")

Logger.log(mobileData )

mobileSheet.getRange('A'+ pos).setValue(mobileData.url)

mobileSheet.getRange('B'+ pos).setValue(mobileData.score)

mobileSheet.getRange('C'+ pos).setValue(mobileData.firstContentfulPaint)

mobileSheet.getRange('D'+ pos).setValue(mobileData.speedIndex)

mobileSheet.getRange('E'+ pos).setValue(mobileData.timeToInteractive)

mobileSheet.getRange('F'+ pos).setValue(mobileData.firstMeaningfulPaint)

var desktopData = fetchDataFromPSI('desktop', url)

Logger.log("Desktop Score: ")

Logger.log(desktopData)

desktpSheet.getRange('A'+ pos).setValue(desktopData.url)

desktpSheet.getRange('B'+ pos).setValue(desktopData.score)

desktpSheet.getRange('C'+ pos).setValue(desktopData.firstContentfulPaint)

desktpSheet.getRange('D'+ pos).setValue(desktopData.speedIndex)

desktpSheet.getRange('E'+ pos).setValue(desktopData.timeToInteractive)

desktpSheet.getRange('F'+ pos).setValue(desktopData.firstMeaningfulPaint)

}

catch(error){

Logger.log("invalid URL : " + url)

mobileSheet.getRange('A'+ pos).setFontColor("red")

mobileSheet.getRange('A'+ pos).setValue(url)

desktpSheet.getRange('A'+ pos).setFontColor("red")

desktpSheet.getRange('A'+ pos).setValue(url)

Logger.log(error)

}

})

}5th step

In this step PageSpeedApiEndpointUrl() function was used. This step is crucial since we need to generate the Endpoint by using the API URL and API key.

function PageSpeedApiEndpointUrl(strategy, url) {

const apiBaseUrl = 'https://www.googleapis.com/pagespeedonline/v5/runPagespeed'

const websiteHomepageUrl = url

const apikey = 'AIzaSyC3Rc9Y0zE23Q8cWikYk5DBVtVCJKDNaYA' // Your API key

const apiEndpointUrl = apiBaseUrl + '?url=' + websiteHomepageUrl + '&key=' + apikey + '&strategy=' + strategy

return apiEndpointUrl

}6th step

This is another crucial step where function getStatusCode(url) was used to validate each URLs. Noticeably, try functions were used along with UrlFetchApp to check the validation by trying each URLs and help getting the error to be logged by using catch function. In addition, another function called fetchDataFromPSI was used for validation as well. Thenceforth, we fetched the data in a JSON format and put the value into the endpoint for every URL. This entire process was done from line 1 to 24.

function getStatusCode(url){

var options = {

'muteHttpExceptions': true,

'followRedirects': false

}

try{

var response = UrlFetchApp.fetch(url, options)

}catch(error){

Logger.log(error)

return(error)

}

return response.getResponseCode()

}

function fetchDataFromPSI(strategy, url) {

var options = {

'muteHttpExceptions': true,

}

const PageSpeedEndpointUrl = PageSpeedApiEndpointUrl(strategy, url)

const response = UrlFetchApp.fetch(PageSpeedEndpointUrl, options)

const json = response.getContentText()

const parsedJson = JSON.parse(json)

const lighthouse = parsedJson['lighthouseResult']

7th step

After that we fetched the data in a JSON format and put the value into the endpoint for every URL.

const result = {

'url': url,

'score': lighthouse['categories']['performance']['score']*100,

'firstContentfulPaint': lighthouse['audits']['first-contentful-paint']['displayValue'],

'speedIndex': lighthouse['audits']['speed-index']['displayValue'],

'timeToInteractive': lighthouse['audits']['interactive']['displayValue'],

'firstMeaningfulPaint': lighthouse['audits']['first-meaningful-paint']['displayValue'],

}

return result

} Note: If you want to know more about all the functions that were used there’s more information here Disclaimer(s)

- !!! The code is not production ready yet. So it might crash. We're continuing to work on it.

- !!! The URL must be valid and in standard format (including https://)

- !!! The runtime of the code is dependable on your computer and, the more URLs, the more time it will take.

- !!! This automation procedure still has not been sent to Google for approval by Google yet, and if you are worried about security, you can build your own script.